0. Logistical Info

- Section date: 9/20

- Associated lectures: 9/12, 9/14

- Associated pset: Pset 2, due 9/22

- Office hours on 9/20 from 7-9pm at Quincy Dining Hall

- Remember to fill out the attendance form

- Scroll to section 5 for a concise content summary.

0.1 Summary + Practice Problem PDFs

Summary + Practice Problems PDF

Practice Problem Solutions PDF

1. Brushing up on the definition of probability

We’ll restate the axioms for the general definition of probability:

Definition of probability:

There are just two axioms (rules that probabilities have to follow):

- $P(S) = 1, P(\emptyset) = 0.$

- If events $A_1, A_2, \ldots$ are disjoint, then $$ P\left( \bigcup_{j=1}^\infty A_j\right) = \sum_{j=1}^\infty P(A_j). $$

In other words, if $A_1, A_2, \ldots$ partition some event $B$, then $P(B) = \sum_{j=1}^\infty P(A_j)$.

Tips for calculating probabilities:

- Define events for every aspect of the problem (e.g., “$A$ = the event that it rains tomorrow, $B$ = the event that it rained today”)

- Write out the probabilities that you are given in the problem using notation (e.g., “$P(A|B) = 1/2$, $P(B) = 1/4$).

- Write the probability that you want to calculate using notation (e.g., we want to calculate the unconditional probability that it rains tomorrow, $P(A)$).

- Figure out how the tools we have learned allow you to utilize the probabiliies that you do know (step 2) to calculate the probabilities that you don’t know (step 3).

There are some important results that follow:

- Probability of a complement: If $A$ is an event a sample space $S$, $$ P(A) = 1 - P(A^c). $$ Concisely, the probability of an event occuring is $1$ minus the probability of the event not occuring.

- Probability of a union: For events $A$, $B$, we have $$ P(A \cup B) = P(A) + P(B) - P(A \cap B). $$ It’s also useful to “disjointify” $A \cup B$ into a partition ($A \cup B^c, A \cap B, A^c \cup B$) which allows us to use the second axiom and get $$ P(A \cup B) = P(A \cup B^c) + P(A \cap B) + P(A^c \cup B). $$

- Principle of Inclusion-Exclusion (PIE): this is a general formula for the probability of the union of $n$ events

\begin{align*}

P(\bigcup_{i=1}^n A_i) &= \sum_i P(A_i) - \sum_{i < j} P(A_i \cap A_j)\\

&+ \sum_{i < j < k} P(A_i \cap A_j \cap A_k) - \cdots + (-1)^{n+1} P(\bigcap_{i=1}^n A_i).

\end{align*}

Note that the formula for the probability of the union of two events is the $n=2$ case of PIE.

A potential workflow (that you saw on Pset 1) for the probability of an intersection, $P(A_1 \cap \cdots \cap A_n)$, is to- Use complementary counting and DeMorgan’s law (in that order) to turn the intersection into a union: \begin{align*} P(A_1 \cap \cdots \cap A_n) &= 1 - P((A_1 \cap \cdots \cap A_n)^c)\\ &= 1 - P(A_1^c \cup \cdots \cup A_n^c) \end{align*}

- Apply PIE to the union $P(A_1^c \cup \cdots \cup A_n^c)$

2. Conditional Probability

Notation note:

We will start writing $P(A \cap B)$ as $P(A, B)$ (i.e., commas between events and intersections are equivalent).

Conditional probability:

If $A$ and $B$ are two events, then the probability that $A$ occurs conditional on the fact that $B$ occurs (or given that $B$ occurs) is notated as $P(A|B)$ and equals $$ P(A|B) = \frac{P(A, B)}{P(B)}. $$ All conditions go to the right of the bar symbol $|$.

We read $P(A|B)$ is the “probability of $A$ given $B$” or “probability of $A$ conditioned on $B$” Intuitively, we can consider that if we know $B$ occurs, $B$ basically becomes our new sample space, so we take the probability that both $A$ and $B$ occurs, $P(A, B)$, and rescale it by the probability that $B$ occurs, $P(B)$.

We’re also quick to note that conditional probabilities are the same as “normal” probabilities — in fact, all probabilities can be considered conditional, we just treat some conditions more implicitly than others since they are more obvious/always involved to the problem. We’ll use extra conditioning to refer to problems where some conditions are always present (i.e., we never want to/don’t know how to calculate the probability of those conditionns). For example, to calculate the probability that it rains tomorrow ($A$) given that it rained today $B$, we would right $P(A|B)$. However, we are implicitly conditioning on a lot of things: that the world exists tomorrow ($W$), that I will be on Harvard campus when I check whether it rains $H$, etc. So we could incorporate these extra conditions into our problem to write the definition of conditional probability with extra conditioning: $$ P(A|B, H, W) = \frac{P(A, B | H, W)}{P(B | H, W)} $$ As you can see, when we want certain events to be extra conditions, they are conditions in every related probability we calculate. Each time we apply a formula for conditional probability, we have to choose whether to treat the each condition like $B$ (free to move around) or like $H$ (extra conditioning/always a condition).

3. Tools using Conditional Probability

3.1 Probability of an Intersection

For events $A, B$ we can rearrange the definition of conditional probability to find the probability of their intersection: $$ P(A, B) = P(A) P(B | A) = P(B) P(A | B). $$ This works for intersections of $n$ events: $$ P(A_1, A_2, \ldots, A_n) = P(A_{i_1}) P(A_{i_2} | A_{i_1}) \cdots P(A_{i_n} | A_{i_1}, A_{i_2}, \ldots A_{i_{n-1}}) $$ where $i_1, i_2, \ldots, i_n$ is a permutation of $1, 2, \ldots, n$. Note that we can choose the conditions in any order we want, and pick the order to our best convenience — for example, if you only know the unconditional probability of $A_8$, you should let $i_1 = 8$.

The probability of an intersection with extra conditioning is $$ P(A, B | C) = P(A | C) P(B | A, C) = P(B | C) P(A | B, C). $$

3.2 Law of Total Probability (LOTP)

The law of total probability (LOTP) is a clever rephrasing of the second axiom of probability (the probability of a partition is the sum of probabilities): if $A_1, A_2, \ldots, A_n$ partition the sample space, then

\begin{align*}

P(B) &= P(B, A_1) + P(B, A_2) + \cdots + P(B, A_n).

\end{align*}

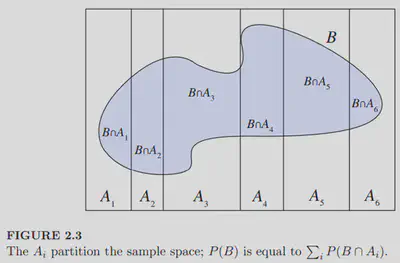

See Figure 2.3 from Blizstein & Hwang below for a visualization:

We usually break down each of the terms using the result for the probability of an intersection section 3.1 $$ P(B) = P(B | A_1) P(A_1) + P(B | A_2) P(A_2) + \cdots + P(B | A_n) P(A_n) $$ When you only know $B$ in terms of conditional probabilities, we can make those conditional probabilities appear through LOTP. As Joe likes to say, condition on what you wish you knew:

LOTP with extra conditioning is $$ P(B | C) = P(B | A_1, C) P(A_1 | C) + P(B | A_2, C) P(A_2 | C) + \cdots + P(B | A_n, C) P(A_n| C) $$

3.3 Bayes’ Rule

Bayes’ Rule is also a result of the formula for the probability of an intersection: $$ P(B|A) = \frac{P(A|B) P(B)}{P(A)}. $$ The denominator often gets expanded out using LOTP (section 3.2), often with a partition containing $B$ like $$ P(B|A) = \frac{P(A|B) P(B)}{P(A|B) P(B) + P(A|B^c) P(B^c)}. $$ Bayes’ rule is used in situations where we don’t know how to calculate the probability of $B$ given $A$, $P(B|A)$, but know how to calculate the probability of $A$ given $B$, $P(A|B)$.

Bayes’ rule with extra conditioning is $$ P(B | A, C) = \frac{P(A | B, C) P(B | C)}{P(A | B, C) P(B|C) + P(A|B^c, C) P(B^c|C)} $$

4. Independence

Independence for two events

Two events $A, B$ are independent if $$ P(A, B) = P(A) P(B) $$

For $P(A), P(B) > 0$, this either of the following as well: \begin{align*} P(A|B) &= P(A) \text{ or }\ P(B|A) &= P(B) \end{align*}

Intuitively, independence means that information about $A$ (e.g., knowing whether $A$ occurs) gives us no information about $B$. Some

- Note that independence goes both ways — if $A$ is independent of $B$, then $B$ is independent of $A$.

- If $A$ is independent of $B$, then $A$ is independent of $B^c$ and $A^c$ is independent of $B^c$.

Independence for many events

A group of events $A_1, A_2, \ldots, A_n$, are independent if for any subset $A_{i_1}, A_{i_2}, \ldots, A_{i_k}$, $$ P(A_{i_1}, A_{i_2}, \ldots, A_{i_k}) = P(A_{i_1}) P(A_{i_2}) \cdots P(A_{i_k}) $$ Note that pairwise independence (e.g., showing that $P(A_i, A_j) = P(A_i) P(A_j)$ for all $i,j$) is required, but not enough, to show that joint independence of all of the sets.

4.1 Conditional Independence

Conditional independence follows a similar formula: $A, B$ are conditionally independent given $C$ if $$ P(A, B | C) = P(A|C) P(B|C). $$ You can see how this is analogous to extra conditioning for the other results. Conditional independence of many events is similarly defined.

5. Summary

Notation note: see that we use commas and intersections interchangeably (i.e., $P(A, B, C) = P(A \cap B \cap C)$).

Tips for calculating probabilities:

- Define events for every aspect of the problem (e.g., “$A$ = the event that it rains tomorrow, $B$ = the event that it rained today”)

- Write out the probabilities that you are given in the problem using notation (e.g., “$P(A|B) = 1/2$, $P(B) = 1/4$).

- Write the probability that you want to calculate using notation (e.g., we want to calculate the unconditional probability that it rains tomorrow, $P(A)$).

- Figure out how the tools we have learned allow you to utilize the probabiliies that you do know (step 2) to calculate the probabilities that you don’t know (step 3).

5.1 Definition of Probability

Axioms of probability:

- With sample space $S$, \begin{align*} P(S) &= 1\\ P(\emptyset) &= 0. \end{align*}

- For $A_1, A_2, \ldots, $ that partition $B$ (this can be finite or infinite), \begin{align*} P(B) = \sum_{j=1}^\infty P(A_j) \end{align*}

Probability of a complement: For event $A$, $$ P(A) = 1 - P(A^c) $$

Probability of a union: For events $A$ and $B$, \begin{align*} P(A \cup B) &= P(A) + P(B) - P(A \cap B)\\ &= P(A \cap B^c) + P(B \cap A^c) + P(A \cap B) \end{align*}

Principle of Inclusion-Exclusion: For events $A_1, \ldots, A_n$, \begin{align*} P(\bigcup_{i=1}^n A_i) &= \sum_i P(A_i) - \sum_{i < j} P(A_i \cap A_j)\\ &+ \sum_{i < j < k} P(A_i \cap A_j \cap A_k) - \cdots + (-1)^{n+1} P(\bigcap_{i=1}^n A_i). \end{align*}

5.2 Conditional Probability

Conditional probability: For events $A$ and $B$, the probability of $A$ given $B$ (i.e., given that $B$ occured) is \begin{align*} P(A|B) = \frac{P(A \cap B)}{P(B)}. \end{align*} …with extra conditioning: \begin{align*} P(A|B, C) = \frac{P(A \cap B | C)}{P(B | C)}. \end{align*}

5.3 Conditional Probability Tools

First-step analysis: If you ever need to solve a problem involving a sequence of things (like a game with many turns, or a random walk, or so on) and are stuck, try first-step analysis: conditioning what happens after the first step. You’ll often be able to get a recursive equation that is easier to solve.

Probability of an intersection: \begin{align*} P(A_1, A_2, \ldots A_n) &= P(A_1) P(A_2 | A_1) \cdots P(A_n | A_1, \ldots, A_{n-1})\\ &= P(A_n) P(A_{n-1} | A_n) \cdots P(A_1 | A_2, \ldots, A_n), \\ &= [\text{chaining in any order that is convenient for you}]. \end{align*} …with extra conditioning: \begin{align*} P(A_1, A_2, \ldots A_n | C) &= P(A_1 | C) P(A_2 | A_1, C) \cdots P(A_n | A_1, \ldots, A_{n-1}, C) \end{align*} Law of Total Probability (LOTP): for events $A_1, A_2, \ldots, A_n$ that partition $S$, we can find $P(B)$ by \begin{align*} P(B) &= P(B, A_1) + P(B, A_2) + \cdots + P(B, A_n) \\ &= P(B | A_1) P(A_1) + P(B | A_2) P(A_2) + \cdots + P(B | A_n) P(A_n). \end{align*} We pick $A_1, A_2, \ldots, A_n$ to “condition on what we wish we knew.” These are situations where you don’t know $P(B)$, but you know $P(B | A_1), (B | A_2)$, etc.

…with extra conditioning: \begin{align*} P(B|C) &= P(B, A_1|C) + P(B, A_2|C) + \cdots + P(B, A_n|C) \\ &= P(B | A_1,C) P(A_1|C) + P(B | A_2,C) P(A_2|C) + \cdots + P(B | A_n,C) P(A_n|C). \end{align*} Bayes’ Rule: for events $A, B$, if we want to calculate $P(B|A)$ but can only know how to calculate $P(A|B)$, \begin{align*} P(B|A) &= \frac{P(A|B) P(B)}{P(A)}\\ &= \frac{P(A|B) P(B)}{P(A|B) P(B) + P(A|B^c) P(B^c)}, \end{align*} where we commonly expand the denominator using the Law of Total Probability (LOTP).

…with extra conditioning: \begin{align*} P(B|A, C) &= \frac{P(A|B, C) P(B|C)}{P(A|C)} \\ &= \frac{P(A|B, C) P(B|C)}{P(A|B, C) P(B|C) + P(A|B^c, C) P(B^c |C)} \end{align*}

5.4 Independence

Independence: $A, B$ are defined to be independent if \begin{align*} P(A, B) = P(A) P(B). \end{align*} Note that if $A, B$ are independent, then so are $A, B^c$ and $A^c, B$, and $A^c, B^c$; basically any functions of $A$ and $B$ are independent.

VERY IMPORTANT: Disjointness and independence are not the same thing, and disjoint events are in fact usually independent.

A set of events $A_1, A_2, \ldots, A_n$ is independent if any subset of the events $A_{j_1}, \ldots, A_{j_k}$ follows the equation. \begin{align*} P(A_{j_1}, \ldots, A_{j_k}) &= P(A_{j_1}) \cdots P(A_{j_k}). \end{align*} Basically, for any combination of independent events, we should be able to factor out the probabilities.

Conditional independence: \begin{align*} P(A, B | C) = P(A|C) P(B|C). \end{align*} VERY IMPORTANT: Independence and conditional independence are not the same/do not imply each other. There is no guarantee that independent events are conditionally independent, or vice versa.